Independent Practice

- Taylor H

- Nov 24, 2020

- 75 min read

Updated: Jan 12, 2021

I was (and still am) very excited about this project when I was introduced to it, because it allows me to focus on things that I am interested in, and gives me free rein to make whatever I want. It also piqued my interest because we were asked to submit research, development reports, and preparatory work rather than a fully resolved thing. This is quite different from what I am used to, but I think it will be good because it turns the research and preparatory work into the final pieces, allowing me to zone in on the finer details and to treat complete pieces as "tests". I think that I will still work towards a completed piece, even if I finish it after it is due. I just think it would be more satisfying to have the finished thing rather than just plans for what it could be.

I D E A S

The first idea that I thought of for this project comes from a 40-minute video on how to make your own hologram. The entire video is brilliant to watch and the end product that the dude comes out with is incredible. I watched this video a couple of years ago and I've wanted to make one ever since. I feel like this might be a cool thing to try.

The other idea was to make a playable experience on the computer. I can make 3D models and texture them using photographs I take. There are concepts of post-photography and internet art in this idea, and I'd like to explore different ways of presenting work, specifically over the internet.

Personally, I prefer the idea of the playable experience, because the hologram seems quite one-dimensional, whereas the other idea seems a lot more colourful and I think it will let me go down many more avenues of interest than just making one final piece. I feel that the hologram would have been better suited to the experimental practices unit last year, but for a project based around research and prep work, an idea with a lot more opportunities for those things would be better. Plus, I think that I can relate this idea to my dissertation as well.

I think that I want to keep the area of interest of this project parallel to the dissertation I'm writing alongside it. Not only do the ideas fascinate me, but it will be easier for me in my head to focus on one thing. Long story short, I want my dissertation to be about nostalgia for aesthetics, post-internet and internet art, and how people respond to it in photography. I've been enjoying reading about all these topics and seeing how they all link together.

A P L A Y A B L E E X P E R I E N C E

Recently, I have seen a lot of indie games released that try to emulate the effects of PS1 and N64 graphics and textures. I was interested in this of course because I loved playing games on those systems as a kid, and I still do to this day. The games even go as far as to emulate the shortcomings of the graphics processors and to take the same shortcuts that developers had to take to make sure the game could fit on the system. I would say that this is entirely powered by nostalgia: not for anything specific in the game, but for the aesthetic that the game presents. Since I have been looking into this idea intently for a while now, I connected the dots in my head and I wanted to respond to it somehow.

Screenshots from "No One Lives Under The Lighthouse" on Steam: https://store.steampowered.com/app/1254370/No_one_lives_under_the_lighthouse/

Screenshots from "The Red Planet" on Itch.io:

Screenshots from "Ooer" on Itch.io:

Screenshots from "Beneath a Withering Moon" on Itch.io:

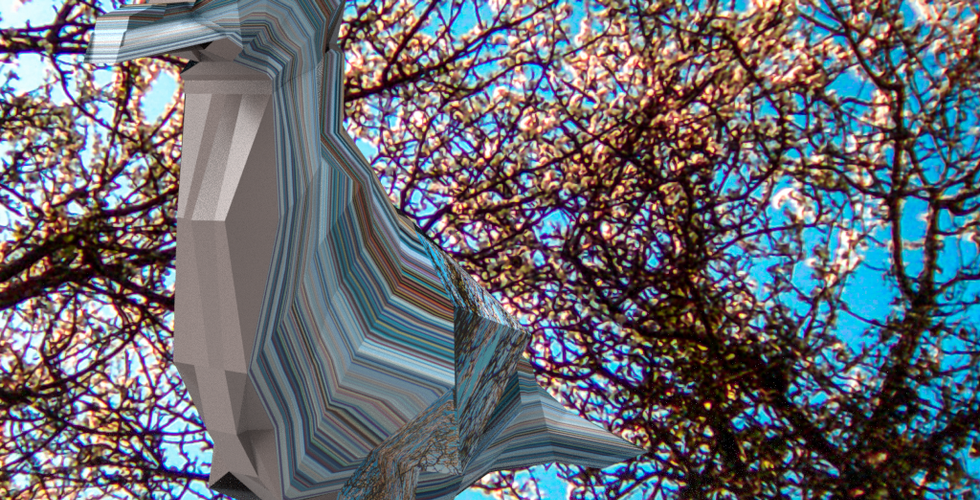

The way that I want to relate it to photography is to take photographs and use them as textures, the idea for which came from research I had done into Post-Photography. I specifically looked into Emanuel Rossetti's series of donuts that have been textured with photographs of stone surfaces. The work isn't about the images anymore, the work is about the fact that they have been transformed into a different shape. It's a great project, and I think that using this for inspiration alongside the PS1 aesthetics and having the objects be interactive in the experience adds a whole new dimension to it.

Emanuel Rossetti's Donuts

Since the textures in PS1 and N64 games are low resolution and pixelated, I plan to use primitive digital cameras from the early 2000s to take the images. I use these cameras for the same reason that game developers make their games look like PS1 games: that sweet nostalgia. I like shooting with them because I enjoy the imperfections, the way their compression algorithms and colour processing work, and how they remind me of what photos used to look like. Growing up with the internet, I was exposed to a lot of images on image-sharing sites, and most of those photographs were taken with these early digital cameras. There is something appealing about that to me, and I've found that I can be expressive with the low-resolution look that they provide.

A great example of this is the YouTube channel Lazy Game Reviews. Clint Basinger, the guy who runs the channel, talks about old technology and stuff from the 1980s to the early 2000s. He reviews video games like Lego Island, Black and White, and Cross Country Canada, to name a few. He also talks about software, like this photo editing funware from the 90s. Another interesting topic he covers is old hardware, like old monitors, building custom Windows XP PCs, or old computers. There are many other things he reviews too, but these three topics seem to be his main areas of interest. My favourite group of videos he's done, however, is the one where he talks about old digital cameras. The videos go into depth about each camera's history and how well it has held up over the years. He also showcases photographs that he takes with them, sometimes linking them in the video description. A lot of the time these cameras aren't easy to use. This isn't an issue for him, however; when he spoke about how terrible the Nick Click camera is, he said "With the Nick Click it's so low res and awful that it's kind of an exercise in futility. The sheer unpredictability of each resulting shot makes this curiously enjoyable to use though, with everything being a complete crap-shoot as to how it will look; from exposure, to focusing, to white balance, to whether or not you can line anything up with its absolutely awful viewfinder". I agree with him here, as it involves a difficult process, which is the same reason that I enjoy film photography and printing: it's all a thoughtful process and a lot of it is up to chance.

The cameras he uses don’t provide the clearest, traditionally “good” photographs, but this isn’t an issue for him either. On the topic of hardware failure making itself present on the images he says: “When it comes to retro photography, I don’t often reach for a camera that’s going to provide crispy, high-res reproductions of reality. If I want to do that, I use a DSLR or my phone. But, if I’m going to go retro, I often go for something that uses obsolete media, or something a little bit fallible, [*], that produces unpredictable results”.

Assorted images I've taken with old digital cameras

Images taken by Lazy Game Reviews with a Nick Click:

Images taken by Lazy Game Reviews with a Canon RC-250 Xap Shot:

Around the same time as I had the idea for a playable experience, my housemate Josh asked if I could wire him £300 for an Oculus Quest (I'd be paid back in a week, of course). I agreed since I wanted to try it out as well. Later on, I realised how stupid I would be not to take advantage of it and use it for my project. Having played a few VR games like Waltz of the Wizard and Boneworks, I can say that playing around with objects with a VR headset on feels completely different to just waving them around with a mouse. It obviously doesn't literally feel like you're holding them, but there is a cognitive thing where it still feels like you are the one moving them around and launching them into the distance. I feel like this addition might not be too difficult to implement (the internet is home to many tutorials), and it will add an extra layer of immersion that would not be there otherwise. It also amplifies the post-photography idea because you feel as if you are interacting with my photographs personally.

Another idea that I had that might take the experience to the next level is having a camera that you can take your own photographs with. This would be amazing for my project because it lets the audience take my images and re-purpose and re-appropriate them to their heart's content. Then not only is the experience about my images, but it's also about creating your own. This also plays into the idea of image sharing over the internet, and it also toys with ideas of photographing exhibition spaces and the materiality of objects (real or fake). The camera will output the shots into a folder that you can view and share around at your own will. I really think that this camera idea is going to be the cherry on the cake, so to speak, and it would be really great if I manage to get it working.

I found an asset pack for Unity called PSXEffects that lets me achieve the same look as a PS1 game. If you watch the videos below, you'll see the lengths the developers went to in making everything look "worse". It's kind of perfect, and I suspect that a lot of the devs making PS1-style games are using this exact pack. I am fully aware that this Nostalgia For Aesthetics thing could be a red herring, as it doesn't exactly fit with the "illusion of reality" idea that I'm going for with the VR, but I don't think it necessarily has to. I see it more as an aesthetic choice. Plus, I've mentioned before that cognitive dissonance is a huge part of VR, so things don't have to look real at all to feel real. If you give someone 5 minutes in any environment or art style, they'll adapt to their surroundings and it'll begin to feel normal. And nostalgia is a huge component of Internet Art, which this project is heavily themed after.

The only issue with PSXEffects is that I haven't decided whether I'm going to be using Unity or Unreal Engine, and PSXEffects is only for Unity. Looking at both my options, it was difficult for me to pick which one I should use since they were both entirely new to me. But, I got some help from a few people on the Games Design course and they told me to use Unreal Engine. This is because I'm a complete beginner, and Unreal has a coding system that is visual-based, so there's no coding knowledge necessary. Plus, there's a ton of pre-made assets that come with Unreal that will allow me to achieve the things I want to with relative ease. I just hope I find a PS1 shader for Unreal Engine 4.

Another thing that I hope exists is the ability to create an in-game camera in Unreal as well, which might be a pointless worry since there's most likely someone out there that's figured it out.

I also found some asset & shader packs for Unreal that give you that sweet, sweet PS1 aesthetic! They both look pretty convincing, but I want to compare them to see which one I'd like to use.

The first one I came across was called Oldskooler. It's nowhere near as good as the PS1 shaders in the Unity-based PSXEffects, as it has a limited number of object rendering options. You'll see what I mean when you watch the video below. Don't get me wrong, the effects they have are very well made and they look great, but it doesn't really have what I'm looking for when compared with the aforementioned PSXEffects. There is also a price tag on this pack: a not-so-measly $19.99 (£15). To be honest, when I was asked in my project proposal about things that I would need to set a budget for, shader packs would have been the last thing I thought of, but since they're culminations of people's hard work (and it's not like I'll only use it once) I don't mind. However, I don't think this pack is where that money will go, since again it doesn't provide the aesthetic that I'm looking for. I do like the slogan that they went with though, "Yesterday's Limitations, Today!", because it sums up what I've been trying to say about what people do with nostalgia in three simple words.

Oldskooler Demonstration

The other shader pack (and the one I'm going with) is called PSXFX. Not only is its name similar to the Unity pack's, but its emulation is breathtakingly accurate. Whereas the previous pack focused on the rendering of 3D objects, PSXFX goes as far as emulating dithering, draw distance, vertex snapping, near clipping, and depth errors, which are all limitations of the antiquated hardware. The fact that they programmed these pseudo-glitches so perfectly that it looks like it is running on an actual PS1 tells me that it's dripping in nostalgia. Why else would people put errors and glitches in their game on purpose? This pack is $32.72 (£24.55), which is significantly dearer than the previous pack, but since I'm certain that another pack like this either doesn't exist or isn't as good, and since it's Christmas next week (as of writing this), I don't mind treating myself to it. Also, the developer, who goes by the alias "Marcis", has a tutorial on how to use it, and according to the comments on their itch.io page they have a Discord server that you can join if you need any help with it, and apparently they're super kind and helpful. Sounds like I couldn't have found a better situation here.
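To get my head around what a couple of those pseudo-glitches actually involve, here's a rough Python sketch of two of them. It's my own illustration of the general ideas, not PSXFX's code (which lives in Unreal shaders I haven't seen the inside of). `snap_vertex` rounds on-screen vertex positions to a grid, which is the usual explanation for the PS1's wobbly polygons, and `dither_channel` uses a 4x4 Bayer matrix to cut a colour channel down to 32 levels (5 bits) while breaking up the banding. Both function names and the 32-level default are assumptions for the example.

```python
# Hypothetical sketch of two PS1-style "pseudo-glitches".
# This is NOT PSXFX's code; it only illustrates the general ideas.

# 4x4 Bayer matrix for ordered dithering (each value 0-15 appears once).
BAYER_4X4 = [
    [0, 8, 2, 10],
    [12, 4, 14, 6],
    [3, 11, 1, 9],
    [15, 7, 13, 5],
]


def snap_vertex(x, y, grid=1.0):
    """Round a projected screen-space vertex to a coarse grid.

    Without sub-pixel precision, vertices jump from grid point to grid
    point as the camera moves, which causes the familiar PS1 'wobble'.
    """
    return (round(x / grid) * grid, round(y / grid) * grid)


def dither_channel(value, x, y, levels=32):
    """Quantise an 8-bit channel value (0-255) at pixel (x, y) to `levels` steps.

    levels=32 imitates 5 bits per channel. The Bayer threshold decides,
    pixel by pixel, whether to round up or down, so flat areas become a
    fine pattern whose average stays close to the original value.
    """
    step = 255 / (levels - 1)
    threshold = (BAYER_4X4[y % 4][x % 4] + 0.5) / 16  # between 0 and 1
    scaled = value / step
    base = int(scaled)
    q = base + (1 if scaled - base > threshold else 0)
    return round(min(q, levels - 1) * step)


print(snap_vertex(10.4, 7.6))                        # (10.0, 8.0)
print([dither_channel(128, x, 0) for x in range(4)])  # [132, 132, 132, 123]
```

A flat mid-grey turns into a mix of two neighbouring levels, which is exactly the cross-hatched look you see on PS1 gradients.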

PSXFX Showcase

I N T E R N E T & P O S T - I N T E R N E T A R T

I've been interested in these sub-cultures of art for as long as I can remember. They're both art movements completely drenched in memories of a culture that I grew up in: late 90s to early 2000s internet culture. As similar as they are, however, it's important to distinguish the two, to see how they both operate and what they are both used for.

Internet art is a form of artwork that takes place solely online. The work is made online, and then distributed through the same medium it was created in. This is an art form that has entirely circumvented the need for gallery or museum spaces, respectfully spitting in the face of tradition. Many artists have used Internet art as a method of playing with the concept of the white cube gallery, presenting work in different, online ways, which are usually lauded for their interactivity, since interactivity is built into so much of the online realm.

Assorted Examples of Internet Art

One great example is Artie Vierkant's "Image Objects", which is paired with an essay he wrote, aptly titled "The Image Object Post-Internet". (By the way, I'm aware that this is more of a Post-Internet work than an Internet Art one, but it fits perfectly with the idea that both movements share of playing with gallery/exhibition space and exploring different ways for the audience to consume the works, and how that changes their perception.) The work starts out in Photoshop, where Artie makes gradients and polygons. He then UV prints them onto sheets of Dibond composite board and exhibits them in a traditional gallery space. He then takes photographs of these works to create exhibition documentation, which he alters further in Photoshop before releasing the images online. He then exhibits these, and the cycle continues. This makes the work exist in two states at the same time: as physical object and as internet-mediated image. The reproduced versions may even end up as the main aesthetic, overshadowing the original project. This work has inspired many to play with the idea that the objectivity and representational abilities of exhibition documentation can be challenged and questioned. It seemingly turns the internet into an exhibition space itself, where the images of the art become the art itself, and the various image sharing websites become the blank white walls of a gallery. Artie also invites outside parties to join in, making their own changes to the exhibition photographs of the Image Objects. He celebrates this anonymous collaboration too, saying "the things that get me very excited are when a piece gets acted upon by someone else, instead of simply resharing". This suggests that the internet blurs the lines of authorship, welcoming and encouraging creativity in other people.
Through the many alterations that Artie and all other participants have made, the documentation of the work becomes just as important as the work itself.

Artie Vierkant's Image Objects

Image Objects iOS Alternate Reality App

Post-Internet art is a little different to Internet art, however, focusing on the influence that the internet has had on culture, aesthetics, design, and society itself rather than just using the internet as a medium. Internet art can be included in Post-Internet art, but not really the other way around, since there can be art that pertains to the internet but wasn't necessarily created on it. A great example of a sub-culture that came about in a very post-internet way is Vaporwave. This is art that is also coated in nostalgia, with the style's main function being to include signifiers of the early 90s/early 2000s internet landscape, including things like 90s Synthwave imagery, Windows 95 windows and icons, and other items generally considered "aesthetic", like Japanese text, AriZona Iced Tea, Fiji water, and Ancient Roman busts carved out of marble. Vaporwave predominantly started out as a music scene, where the music emulates the visual style it was accompanied by. The music itself consists of slowed-down Jazz, R&B, and Lounge music samples from the 80s & 90s. Both the visual and musical stylings reflect the post-internet ethos very well, highlighting the internet's impact on consumerism and poking fun at capitalism, all while looking totally aesthetic, dude.

Assorted Examples of Vaporwave Art

Both of these art movements also allow, and in some cases encourage, image appropriation as an art form. It's a little contentious whether appropriation is a valid art form, since one artist can submit someone else's work with little to no alterations, but I personally think that it's ripe with artistic potential, since changing the context surrounding an image can change the perception of it completely, which makes it a different piece of work entirely. One example that I can think of is work from an artist called Katja Novitskova. She takes vapid images she finds on the internet and transforms them into cardboard cutouts. She then photographs them, making her work here quite similar to Vierkant's. She documents these cutouts in a specific way, which transforms the images entirely: she shoots the cutouts straight on, which removes the sense of space and parallax, making the images appear as if they are pasted on top of the background. This calls the objectivity of the work into question, making the work less about being found photographs or being cardboard cutouts in an exhibition space, and more about how the link between photo and physical space is removed entirely. These cutouts are themselves exhibited, and as a result people have posed with the objects and uploaded their own images with them in. This has become an integral part of the work, using people's self-inserted documentation of the work as marketing and as a curatorial practice.

Assorted Images by Katja Novitskova

Another artist (who happens to be a favourite of mine) who I believe uses image appropriation is Jon Rafman, with his 9Eyes project. The entire idea is for Jon to use Google Street View to go on long "walks", with the aim of finding interesting, weird, or funny situations. He then screenshots them and presents them on his blog as a form of street photography. This is a great example of image appropriation because Jon didn't take any of the images he uses; they're all taken by the 9-lensed camera atop the Google cars. He reframes them, though, which transforms each image from a utilitarian one designed to document the world into a candid street photograph. The project draws on sublime imagery, although he often seems to pick situations that simply strike him as funny.

Assorted Images from "9Eyes"

I think that Internet and Post-Internet art is an incredibly rich area that can supply me with a lot of inspiration. It relies heavily on nostalgia too, which is a theme I aim to include. Internet and Post-Internet art would not work, or be as successful as it is now, without my generation: people who look back fondly on the time they started out in online.

T R Y I N G O U T D I F F E R E N T 3 D M O D E L L I N G S O F T W A R E

Even though my initial idea was to use Autodesk Maya right out of the gate, I wanted to try a few others just to see which one suited me and my ideas best.

ADOBE DIMENSION

The first one I tried was Adobe Dimension. Our university has extended an Adobe Creative Cloud subscription to us, which includes this software. I hadn't seen or heard anything about this program before, so I was excited to try it out.

So, as it turns out, this is for taking already made 3D objects and applying textures and premade bump-maps and combining them with 2D images to create renders. I would assume that people in advertising use this the most, although I have seen examples of people making art with this program as well. I will keep this program installed because it might come in handy when I want to texture my own models.

But, saying that, I still wanted to see if I could make something with it.

I placed in a default torus shape and adjusted a few sliders controlling how many sides the torus had, then converted it into a standard model and wrapped around it an image that I took with one of my old digital cameras. I wanted to do a few tests this way just to see what the low-res images look like on objects, and also to give Emanuel's donuts a nod.

When the image is placed on top of the 3D model, it is placed as if it were a sticker. On the right-hand side of the screen is a menu where you can play around with the image a bit. First, I clicked on the drop-down menu and changed Decal to Fill. This turns your image from a sticker on the shape to fully covering it. Then, you can play with how the image is offset, and how many times it repeats.
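The Offset and Repeat settings boil down to some fairly simple texture-coordinate maths. Below is a minimal Python sketch, assuming the usual convention where positions on a texture run from 0 to 1 (often called UV coordinates). `tiled_uv` and `sample_nearest` are hypothetical names, and this isn't how Adobe Dimension is written internally, just the general idea behind filling a surface with a repeated, offset image.

```python
# Hypothetical helpers illustrating generic texture tiling maths,
# not Adobe Dimension's actual implementation.


def tiled_uv(u, v, repeat=(1.0, 1.0), offset=(0.0, 0.0)):
    """Map a point (u, v) on the surface, each in [0, 1], to a position in the image.

    Multiplying by `repeat` makes the image appear that many times across
    the surface; adding `offset` slides it; `% 1.0` wraps the result back
    into [0, 1) so the image tiles instead of running off the edge.
    """
    tu = (u * repeat[0] + offset[0]) % 1.0
    tv = (v * repeat[1] + offset[1]) % 1.0
    return tu, tv


def sample_nearest(image, u, v):
    """Return the pixel of a 2D list `image` closest to texture position (u, v)."""
    height, width = len(image), len(image[0])
    x = min(int(u * width), width - 1)
    y = min(int(v * height), height - 1)
    return image[y][x]


texture = [[1, 2], [3, 4]]
print(tiled_uv(0.75, 0.5, repeat=(2, 2)))                          # (0.5, 0.0)
print(sample_nearest(texture, *tiled_uv(0.75, 0.5, repeat=(2, 2))))  # 2
```

With a repeat of 2, three-quarters of the way across the surface lands halfway across the second copy of the image, which is why the wrap-around step is all you need for tiling.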

The first batch of tests (above) I did was with images taken with a Samsung Digimax 301. The first three weren't as successful, but after I edited the images to make them 100x bigger (so that they were pixelated a bit) before making them a texture, it made a huge difference. I started to see what I had in my head: a PS1-style low-poly model with a low-res image as a texture.
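Here's a minimal Python sketch of that enlarging step, assuming it's done with nearest-neighbour resampling (the "Nearest Neighbor (hard edges)" option in Photoshop's Image Size dialog), since a smoothing method like bicubic would blur the image rather than keep the pixels crisp. `upscale_nearest` is a hypothetical helper and the image is just a grid of numbers. My guess at why it helped: once each original pixel is a 100x100 block, the renderer's texture smoothing can only soften the edges between blocks, so the pixelation survives.

```python
def upscale_nearest(image, factor):
    """Enlarge a 2D list of pixels by a whole-number factor with nearest-neighbour
    sampling: every source pixel becomes a factor-by-factor block of itself."""
    enlarged = []
    for row in image:
        wide_row = [pixel for pixel in row for _ in range(factor)]
        for _ in range(factor):
            enlarged.append(list(wide_row))  # copy so rows stay independent
    return enlarged


tiny = [[10, 200], [90, 30]]
big = upscale_nearest(tiny, 100)
print(len(big), len(big[0]))  # 200 200
```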

I think that with the more complex 3D models I make, I will splice together images over the texture map so that it looks a lot more organised and like I meant for it to look a certain way.

I also tested out some images from the camera on my old Samsung Galaxy Y, with the camera whacked down to 0.1 MP. I also did some low-quality renders to see what they would look like. The only real difference that I can see is that they're a little grainier, which does look cooler to me.

High Quality (Slow)

Low Quality (Fast)

BLENDER

There's also a Twitter account that I've found recently that I have been enamoured with, called @lowpolyanimals. They take assorted animals from older video games, take them out of their context, and curate them all on one Twitter page. This is a crazy cool mixture of nostalgia for the games and the classic image-sharing trope of cute animals. I think I'd be interested in responding to this by attempting to recreate some of the simplest ones.

When I opened Blender, I truly knew I was out of my element. There are cryptic icons and puzzling menus that I am hopeless at navigating. Luckily, YouTube user Grant Abbitt came to the rescue with his tutorial on how to make Low Poly Animals in Blender. Hopefully, I can get the basic idea of how to make these things in the 12 and a half minutes of the video.

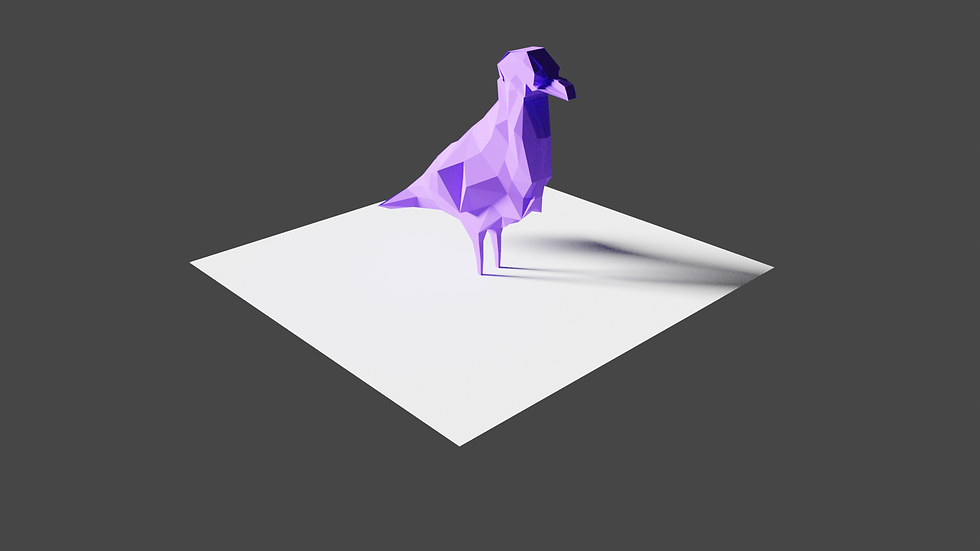

After watching that video, I was genuinely surprised that I could follow along. It turns out that it was only part one of a two-part series, so I intend on watching the second straight away. Instead of using a giraffe like the guy in the tutorial, I based mine off of a stock photo of a seagull.

I got the gist of the second video pretty quickly, as it was just adjusting vertices and sides until you got the shape you want. As easy as dragging them around was, making sure they were perfect and had no gaps was pretty difficult. Plus, I'm still getting my head around all the keyboard shortcuts.

After fumbling about and following the tutorial as best as I could, I ended up with a final render. The tutorial didn't give me a way to apply a photograph as a texture and I couldn't figure one out myself, but I'm still quite impressed and surprised at the way it came out. So far this has been the most challenging software, but that's because I had to make the 3D model myself. And yes, I didn't do the feet on purpose.

Final Render

Just because I was curious to see what the bird would look like with an image over it, I exported him as a .obj file and imported him into Adobe Dimension. I applied the texture and then imported the same image as the background. Again, with more complex models like this, I intend to be more deliberate with photo placements, making it actually resemble what it is supposed to be. This is just for testing purposes, plus I think it all looks real neat.
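Out of curiosity about what's actually inside a .obj file: it's a plain-text format where `v` lines list vertex positions, `vt` lines list texture (UV) coordinates, and `f` lines build faces out of them, counting from 1. The helper below is my own hypothetical illustration of that layout, not what Blender's exporter does internally, and real exports usually also include normals (`vn` lines) and material references, which I've left out.

```python
def to_obj(vertices, uvs, faces):
    """Write a mesh as Wavefront OBJ text.

    vertices: list of (x, y, z) positions -> 'v' lines
    uvs:      list of (u, v) texture coordinates -> 'vt' lines
    faces:    list of faces, each a sequence of (vertex_index, uv_index)
              pairs counted from 0 here; OBJ counts from 1, hence the +1.
    """
    lines = [f"v {x} {y} {z}" for x, y, z in vertices]
    lines += [f"vt {u} {v}" for u, v in uvs]
    for face in faces:
        lines.append("f " + " ".join(f"{vi + 1}/{ti + 1}" for vi, ti in face))
    return "\n".join(lines) + "\n"


triangle = to_obj(
    vertices=[(0, 0, 0), (1, 0, 0), (0, 1, 0)],
    uvs=[(0, 0), (1, 0), (0, 1)],
    faces=[[(0, 0), (1, 1), (2, 2)]],
)
print(triangle)
```

The `vt` lines are what tell a program like Dimension which part of a photograph lands on which part of the model.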

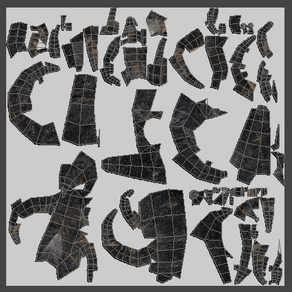

DUST 3D

I hadn't heard of this software before, but when I was looking up "Best 3D Modelling Softwares for Low Poly Models", there was an article singing its praises, so I decided to try it out. I found a video titled "EASILY MAKE 3D MODELS FROM SCRATCH! - WITH THIS FREE OPEN SOURCE TOOL 🤩", so hopefully by the end of it I will be just as excited.

Just from watching the first six minutes, it already seemed a lot more intuitive than Blender. There are drag-and-drop nodes, each with settings that affect the shape, rather than extruding and shearing and hoping nothing is clipping into itself. Actually, to remedy the overlapping issue, they made vertices and sides snap to each other when objects are close, making it a full object with no gaps.
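I don't know how Dust 3D does its snapping under the hood, but the general idea of merging points that sit very close together (similar in spirit to Blender's Merge by Distance) can be sketched like this. `weld_vertices` and the 0.01 tolerance are hypothetical, and checking every vertex against every kept vertex like this gets slow on big meshes; real tools use smarter spatial lookups.

```python
import math


def weld_vertices(vertices, tolerance=0.01):
    """Merge vertices that lie within `tolerance` of an already-kept vertex.

    Returns (kept, remap): `kept` is the list of surviving vertices and
    remap[i] is the index in `kept` that original vertex i now points to,
    so faces can be rewired to the merged points.
    """
    kept = []
    remap = []
    for vertex in vertices:
        for index, existing in enumerate(kept):
            if math.dist(vertex, existing) <= tolerance:
                remap.append(index)
                break
        else:  # no close neighbour found, so keep this vertex
            remap.append(len(kept))
            kept.append(vertex)
    return kept, remap


points = [(0, 0, 0), (1, 0, 0), (0.005, 0, 0)]  # third point nearly touches the first
merged, index_map = weld_vertices(points)
print(merged)     # [(0, 0, 0), (1, 0, 0)]
print(index_map)  # [0, 1, 0]
```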

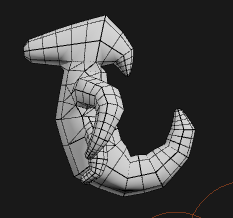

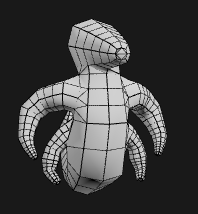

As good as it looked to use, I actually found it pretty hard navigating the 3D space in this program. I think the bare-bones-ness that it has is good, but it leaves a lot of space for confusion. I was following the tutorial and everything made sense, but nothing was really working. I attempted to make a swan, then a frog that somehow turned into a terrible dinosaur creature out to steal your family. The software comes with examples, so I know that it is possible to make great animals in it, and maybe with some practice I could get there. Again, you can export it as a .obj and use it elsewhere.

I think that this manages to create a low-poly look a lot better, as if it was made for it or something. If you take a look at the models I applied images to, you can see that there are more chunks where the images are visible, whereas with the bird from Blender, there was a lot more stretching.

Overall, Blender gave me the best looking outcome in my opinion, but I'm not certain if that's because I got a better tutorial for it than for Dust 3D. Since Dust 3D is a lot simpler to use (despite my struggles with it), I think I will attempt to get better at both, and maybe compare once I know a little more about what I am doing. I think that Blender would be better for more complex, geometrical, and perfect shapes, but Dust 3D is for quick, dirty 3D models that you can spit out in seconds.

Again, I still haven't been able to apply textures how I wanted, by squashing the 3D model into its flat shape (think of it like it has been unfolded; this is usually called UV unwrapping) and applying certain photographs to specific areas so that the objects can be more cohesive. For example, I could have different textures for the arms and the body of the dinosaur, add in some extra details, and just not make it look like I vinyl-wrapped it with an image. From what I've seen, Autodesk Maya has that exact feature, but I'm still waiting on that confirmation of status from the uni so I can try it out.

What should I do moving forward? I think I want to focus more on the photography side of things in the future, because in the end, the models aren't supposed to be masterpieces, they're just supposed to be recognisable. I think I can achieve that better through the texture photographs than through the models themselves. Maybe looking into other ways of creating 3D models could be good too.

A fun exercise could be to take a landscape or a still life, then recreate it in 3D. Each model's textures would be individual photographs taken of that object and mapped to its counterpart in 3D, and then you do a side-by-side comparison to see how well you've done. Or similarly, get some screenshots of old video games and try to recreate them in the same way. I think this exercise will help me get a little better at making 3D models, and it will start getting me to think about how I want to take these texture photos.

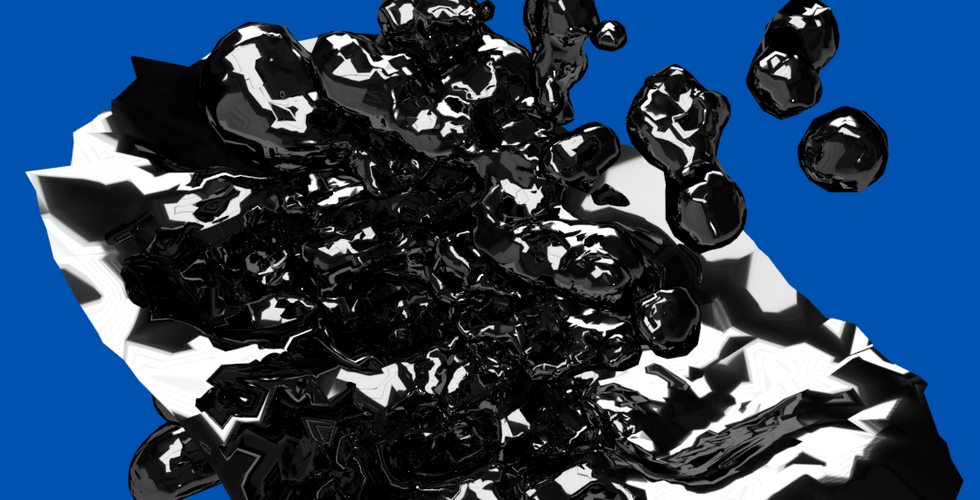

E X P L O R I N G A R T I F I C I A L I N T E L L I G E N C E

I've been a huge fan of image generative AIs and just AIs in general ever since I properly dove into them for my Vision + Communication project last year. The software I used was called RunwayML, and it has a huge collection of open source AI programs that you can use to your heart's content. Well, more like your wallet, since it all runs on remote servers that cost money to keep running. Since everything is open source, they decided to charge for the power you use. You donate a sum of money and as you use the AIs it slowly trickles away. I think I'll invest £10 since that did me just fine when I last used it.

The software has undergone a few updates since I last booted it up, and the UI looks a lot better. There are also many new AIs in the selection for me to mess around with. I scrolled through the entire list of AIs they had on offer, and I singled out some that I think relate to my ideas.

BigBiGAN

The first one I wanted to check out was BigBiGAN. I used it in Vision + Communication to create a Lovecraftian looking self-portrait series, and the way it spits out your image after it's chewed it up is incredibly fascinating to me. If you'd like to learn about how it works, you can read my best understanding of it on the blog post I did for it. Or, if you want to read the writing of someone who knows what they're talking about, Google it.

I mainly wanted to see if they had updated BigBiGAN in any way, but from my tests the outputs seem very similar to the ones I got before. The only difference I noticed was how fast it is now compared to when I used it last. I used some images from a previous shoot using an old DVR camera and this is what I got:

The photographs before being put through the AI...

Their Respective Results.

As you can see, the image it spits out is super low res (256x256 px), which isn't a problem for what I'm doing, but there is another AI I used last year called Image-Super-Resolution, and it's still available. It's an upscaling AI, so it takes lower resolution images and attempts to make them higher res. With a normal photograph it looks pretty normal, but with such a tiny image, the upscaling results in a weird, painterly, leathery texture that looks fairly interesting. I don't think that the look would fit in with the objects though, so I might skip it.

I can only really think of one thing I could use BigBiGAN for moving forward: running texture photographs through it to get variations of the same thing. This way, I could turn Blue_Metal.png into Blue_Metal 1 through 100 if I wanted. Or, since the images it comes out with are square, I could turn them into repeating textures for water, ground, sky, whatever. I might have to edit them to make sure they tile satisfyingly, but that should be manageable.
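One trick I've seen for checking and fixing a texture that needs to tile is to shift the image by half its width and height with wrap-around (like Photoshop's Filter > Other > Offset with "Wrap Around" selected). The edges that have to meet end up in the middle, where any seam is easy to spot and retouch. Here's that shift sketched in Python, with hypothetical helper names and the image as a grid of numbers.

```python
def offset_wrap(image, dx, dy):
    """Shift a 2D list of pixels right by dx and down by dy, wrapping anything
    that falls off one edge back onto the opposite edge."""
    height, width = len(image), len(image[0])
    return [[image[(y - dy) % height][(x - dx) % width] for x in range(width)]
            for y in range(height)]


def half_offset(image):
    """Move the tile's outer edges into its centre so any seam is easy to spot."""
    return offset_wrap(image, len(image[0]) // 2, len(image) // 2)


tile = [[1, 2, 3, 4],
        [5, 6, 7, 8]]
print(half_offset(tile))  # [[7, 8, 5, 6], [3, 4, 1, 2]]
```

Because nothing is lost in the shift, retouching the middle and then shifting back (or just leaving it) gives a texture whose left and right, and top and bottom, edges already match.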

MiDaS & DenseDepth

These AIs try to estimate depth. You put in an image, and it turns it into an eerie black and white image where the closer something is, the brighter it is. I think this could be useful because I could use the outcomes as bump-maps over the surface of a 3D shape. For example, I could take a photo of a concrete path and run it through one of these AIs, texture the 3D object with the photograph, then apply the depth image over the top as a bump-map.

The first one I tried was MiDaS. I was expecting more detail, but the examples they gave were still-life scenes and interiors, whereas I put in architectural shots, so I might have been stressing it out. Plus, the images aren't the highest resolution, so some information might be getting lost.

Images Before

Images after MiDaS

It looks a lot like the negatives of a poorly made pinhole camera, which is quite endearing because I love making those.

After MiDaS, I was interested to see whether DenseDepth was any different, and I was quite surprised to find that it was. It seems to handle detail better, and its colours are inverted relative to the previous one, making it look like a positive from a very primitive camera.

Images after DenseDepth

I really like these images; even though they were just simple tests, they are very sinister and spectral. Just for fun, I combined the images from both AIs in Photoshop by stacking them as layers and setting the blend mode to Difference. I like these a lot more from an artistic standpoint: the contrast is more dramatic and makes the depth more visible too. They also look like they've been solarised.
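Photoshop's Difference blend mode takes the absolute difference between the two layers, pixel by pixel. The sketch below reproduces that in NumPy, assuming both depth maps are greyscale arrays of the same size. The `normalise` step (stretching each map to 0-1 first) is my own assumption so the two AIs' outputs sit on the same scale; it isn't something Photoshop does.

```python
import numpy as np

def normalise(depth: np.ndarray) -> np.ndarray:
    """Stretch any depth map to the 0.0-1.0 range so two models can be compared."""
    d = depth.astype(np.float64)
    span = d.max() - d.min()
    return np.zeros_like(d) if span == 0 else (d - d.min()) / span

def difference_blend(top: np.ndarray, bottom: np.ndarray) -> np.ndarray:
    """Difference blend: absolute per-pixel difference, returned as 8-bit greyscale."""
    diff = np.abs(normalise(top) - normalise(bottom))
    return np.round(diff * 255).astype(np.uint8)
```

If one map were an exact negative of the other (brighter-is-closer versus brighter-is-farther), the difference works out to |2d - 1|: mid-range depths go black and both extremes go bright, which may be part of why the blended images look solarised. The real MiDaS and DenseDepth outputs aren't exact opposites, so this is only a rough explanation.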

Speaking of which, I hit Solarise on them just because. It took the brightest parts and turned them dark, which only adds to how haunting the images are.
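A simple digital version of solarisation inverts every pixel above a brightness threshold and leaves the darker ones alone, which matches what happened to the brightest parts here. This is a sketch of that common curve on 8-bit greyscale, with a hypothetical default threshold of 128; I don't know exactly how Photoshop's own filter is implemented, so treat it as an approximation.

```python
import numpy as np

def solarise(image: np.ndarray, threshold: int = 128) -> np.ndarray:
    """Invert every pixel at or above the threshold; leave darker pixels unchanged."""
    img = image.astype(np.int16)                      # avoid uint8 wrap-around in 255 - img
    out = np.where(img >= threshold, 255 - img, img)  # bright parts flip to dark
    return out.astype(np.uint8)
```

With the default threshold nothing in the output can be brighter than 127, which is why solarised images tend to look murky and low-key overall.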

Again, I'm not going to use these as textures, but they can still do their job behind the scenes as bump-maps.

Colorful-Clouds

At this point, I ran out of AIs that take an image and give you another one back; the rest generate images of their own. I found one called Colorful-Clouds, which just generates an image of some clouds. I was thinking about using them for atmosphere: there are tricks to make realistic-looking clouds from just an image in game-making software, and there's most likely a tutorial for it somewhere.

Those images weren't what I was expecting. The dataset the AI draws from is made up of photographs of clouds, so it creates its own clouds based on them. They don't really look like something I could use for skyboxes, or even for the trick I mentioned earlier. They do look pretty though.

Brutalism_Generator

This AI is like Colourful Clouds, except instead of being trained on clouds, it's trained on photographs of Brutalist architecture. I really enjoy this kind of architecture, so I was drawn to it. Plus, I could use a similar method to the one I used for my seagull: bring a randomly generated Brutalist building into Blender and create a 3D model based on it. That way I could create outdoor and metropolitan areas in the VR experience.

These look pretty cool. They resemble 1940s black and white photography because of the colours and the overall image quality, and the anomalies in the generation look like otherworldly floating architecture. They also have an eerie quality to them. I think they would be a little too difficult for me to recreate in Blender, but I might have an easier time in Maya as soon as it becomes available to me.

Textures_DTD

Textures_DTD is an AI that generates its own textures. I think this could be useful since it could save me from having to shoot a lot, or it could even inspire me to take photos of things I hadn't thought about before.

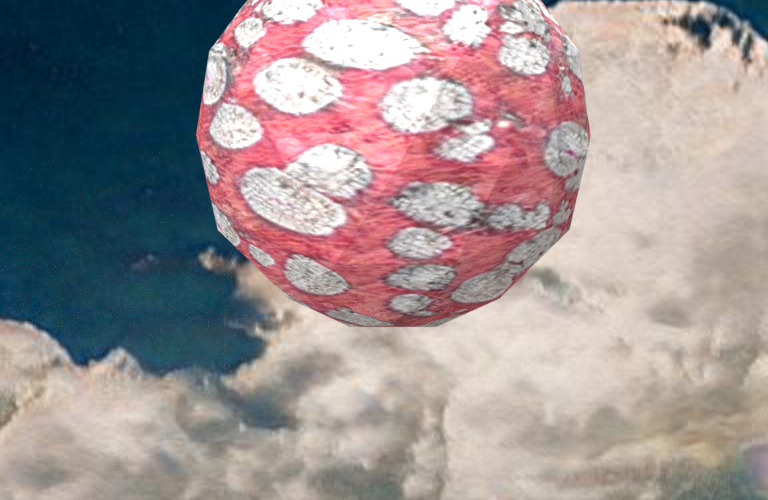

I am really impressed with what I saw! These textures look great, and some of them look quite realistic as well. To see what they looked like on 3D objects, I reopened Adobe Dimension and applied them to spheres, with the Colourful Clouds in the background.

Looking at these textures mapped onto a 3D model, I can now say that I would definitely use them. A few of them could even pass as photographs, which almost legitimises the strange AI-esque textures and makes them look slightly real as well. Cool!

And those are all the AIs I tried from Runway. The others either had nothing to do with what I was doing (movement trackers, text-based AI) or just didn't interest me (style transfer AI). I also found an AI called Monster Mash, which turns 2D drawings into animatable 3D objects, but there isn't a demo available at the moment.

F I N D I N G D I F F E R E N T W A Y S T O C R E A T E 3 D M O D E L S

While finding Monster Mash, I was also looking up different ways of creating 3D models, to see if there is a way to get reliable, good-looking results just by inputting images. I found one that looked promising, Selva3D. It claimed that you could input a single image and it would generate a model for you. Sounds great, but also too good to be true. To stress-test it a little, I put the Brutalist buildings that I generated earlier through it.

To start, I was asked to create an account, so begrudgingly I did. Now that they know my full name and email address, I just have to wait until they send the confirmation email so I can use it. Thanks, Selva.

I know some apps let you take several pictures of something in a circle and will try to generate a 3D model from them, so maybe I could try those. My only issue with the mobile apps is that they are very quick to say no. If something doesn't work, they just tell you that it failed, rather than trying anyway and seeing what happens. I feel like desktop programs would handle that better, since computers have a lot more processing power.

VisualSFM

I found one! VisualSFM! It lets you upload as many photographs as you like, and it'll just generate something for you. It had trouble with the five images I generated before, so I went on a bit of a spree and saved a ton more, hoping it would then find similarities and attempt something. So far it's just said no to me, which is disappointing. I've generated 100 individual buildings, and now I'm running them through the program again, just to see if there are any similarities at all. Since they all look similar in some way, I'm hopeful this will work.

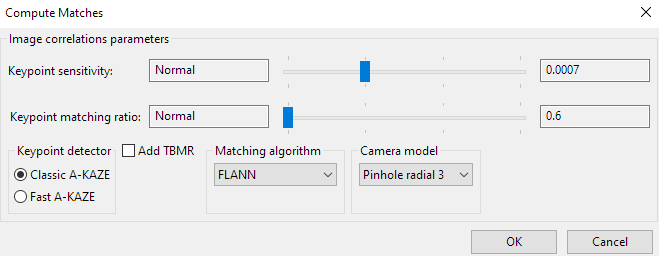

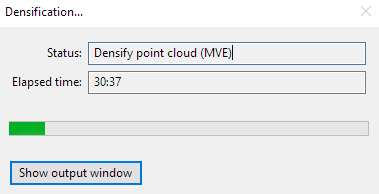

And success! I think my issue before was that I didn't extract the program from the zip file before I ran it, but now that I have, I hit the Compute Missing Matches button, and now it's going through each combination of images to see where all the similar points are.

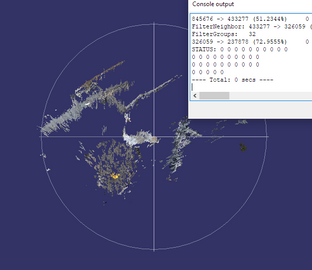

17.133 minutes later, we're done. I then click Compute 3D Reconstruction and see what happens. I was presented with a few dots on my screen. I'm not sure what these are, but I exported it anyway.
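Comparing "each combination of images" grows quickly with the number of images, which goes some way to explaining the wait. Assuming every pair is matched exactly once (as "each combination" suggests; VisualSFM may also offer other matching strategies), n images give n(n - 1)/2 pairs. A quick Python check using the numbers above:

```python
from math import comb

def pairwise_matches(n_images: int) -> int:
    """Number of unique image pairs if every image is compared with every other one."""
    return comb(n_images, 2)  # n * (n - 1) / 2

pairs = pairwise_matches(100)   # the 100 generated buildings
seconds = 17.133 * 60           # the reported matching time, in seconds
print(pairs, round(seconds / pairs, 3))  # prints: 4950 0.208
```

That works out to roughly a fifth of a second per pair. Doubling to 200 images would mean 19,900 pairs, about four times the work, so throwing more generated buildings at it gets expensive fast.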

So it exported just a set of points; there was no edge or face information at all. I think it tried, but it didn't exactly work. To be fair, my biggest gripe with these programs is that they sometimes just refuse you, whereas this one actually gave me something. Perhaps I should try this again properly, with real photographs rather than AI-generated buildings.

A S K I N G M Y S E L F W H Y

After one of my tutorials, I was asked a very simple yet challenging question: why? Why am I doing what I'm doing? At first, I thought I didn't really have an answer, and that I only wanted to do it because my housemate bought a headset recently. However, I won't get any marks for that, so I had to think a bit more on the matter.

The reason I'd like to use VR is the immersion I felt when I tried it out a few times. The simulated physics creates a strange cognitive dissonance: you know that you are in a virtual world, yet it feels as if you are physically manipulating the things around you. I feel that this trick of fooling people into a false sense of reality opens many doors and allows me to explore certain sociological ideas as well. For example, the illusion of reality can be used to induce emotions, like a sense of impending doom, severe anxiety, or a gutting sadness. This is because your perspective is mapped and ported into the game, so in a literal sense you are being brought into the digital world, or at least the VR headset is a window into that world.

I think VR could also address the issue people raise when comparing seeing work in an exhibition to seeing it online: they say that seeing work in person is far superior because you can appreciate the scale, texture, colour, etc. much better. Since those are all things that can be played with in 3D software, we can create a pseudo version of that, a remedy for those who can't make it to exhibitions but really like seeing art up close. I also think the proposed in-game camera will give the audience a much stronger sense of ownership of the images they take, as it will feel like they are taking the photographs themselves.

I genuinely feel that VR is such a visceral experience, and it can be used to convey messages incredibly well. People don't need to put in the effort of relating to a character or a story if everything plays out from their own literal perspective.

P L A Y I N G W I T H T E X T U R E

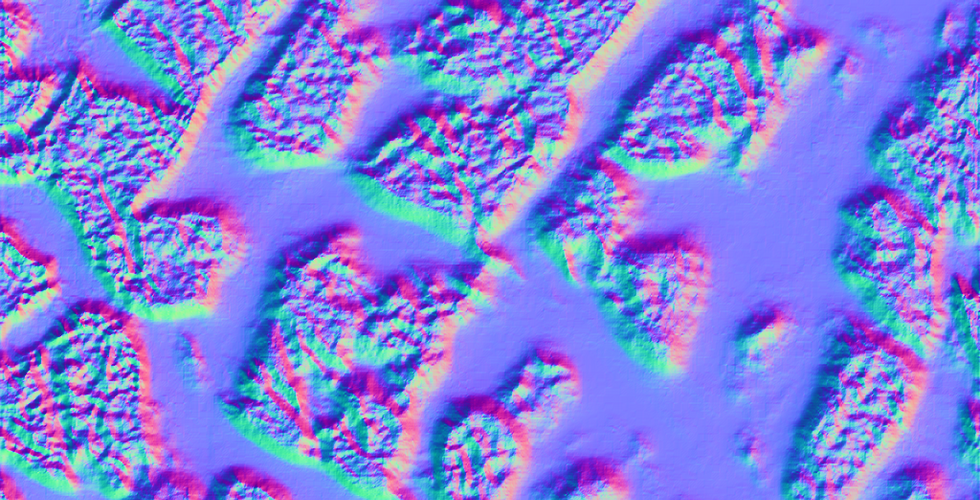

When I was in Adobe Dimension, I found a spot where you could place a "normal" map, which applies a surface texture. In Photoshop, you can make these by opening your texture and clicking Filter > 3D > Generate Normal Map. It then shows you what it will look like once it's applied to a shape, and you can adjust the sharpness of the darks, mids, and highlights separately.
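For anyone curious what a normal map actually stores: a common way to build one from a texture is to treat brightness as height, measure the slope in each direction, and turn that slope into a surface direction whose X, Y and Z are written into the red, green and blue channels. The NumPy sketch below does that. It is not Photoshop's actual algorithm (which I don't know), and `strength` is a hypothetical parameter that only loosely plays the role of the sharpness sliders.

```python
import numpy as np

def height_to_normal_map(height: np.ndarray, strength: float = 2.0) -> np.ndarray:
    """Turn a greyscale height image (2-D array, 0-255) into an RGB normal map.

    Brightness is treated as height; the slope in x and y becomes the tilt
    of the surface normal at each pixel.
    """
    h = height.astype(np.float64) / 255.0
    dy, dx = np.gradient(h)             # slope down the rows, then across the columns
    nx = -dx * strength                 # steeper slopes tilt the normal further
    ny = -dy * strength
    nz = np.ones_like(h)
    length = np.sqrt(nx**2 + ny**2 + nz**2)
    normal = np.stack([nx, ny, nz], axis=-1) / length[..., None]  # unit-length vectors
    # Map each component from [-1, 1] to [0, 255]: X -> red, Y -> green, Z -> blue.
    return np.round((normal + 1.0) * 0.5 * 255).astype(np.uint8)
```

Flat areas come out as (128, 128, 255), the lavender-blue that dominates most normal maps. Programs disagree on which way the green channel points (often described as the OpenGL versus DirectX convention), so if the lighting looks inverted, flipping the green channel is the usual fix. A texture can be loaded as greyscale with Pillow, e.g. `np.asarray(Image.open("texture.png").convert("L"))`.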

Then, in Dimension, select the Default Material of the object you want to apply the texture to, and drag and drop the normal map in.

To test this out, I made a few more renders of some low-poly spheres and followed all the steps above with some more textures that were generated by Textures_DTD.

The Textures

Their Respective Normal Maps

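I really like the look of the normal maps; the bright colours are very appealing to me. I assume the differences in brightness and hue translate into different lighting information in 3D rendering software: as far as I understand it, the red, green, and blue channels record which way each point of the surface faces, and the renderer uses that to work out how light hits it.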

I generated the spheres, applied the textures, then applied their normal maps, and lined them up on the X and Y axes so that the depth and colours matched. I was really happy with the results; the textures stand out a lot more because of the shading from the normal maps.

I think this will be useful knowledge to have moving forward. I'm especially excited to see how the PSX shaders interact with normal maps that I make, and I'm also interested to see what maps come out of the photographs I take when I do my first shoot.

T R Y I N G O U T A R ( A U G M E N T E D R E A L I T Y )

Since I'm looking at different conceptual ideas, I also looked at a different way of interacting with 3D models: Augmented Reality. I had made a strange sea creature in Dust3D, textured it, applied a normal map to it, exported it, and loaded it into a very bare-bones AR app called AR Viewer. What really impressed me was how clearly I could see the details of the normal map. Seeing it in a 3D environment where I can move the camera around freely adds another layer of depth, as tilting the camera changes the way the light bounces off the normal map.

Random Renders of the Sea Creature

This is a really cool idea and I'd like to continue working with AR at some point, even if it's just as a more fun way of looking at the 3D models I make. Personally, though, I think VR is still the way forward for the end product. With AR, you can only really view and tap on the 3D object, but in VR there are so many more options for what the player can do. Plus, looking at something through a phone and seeing it through a headset are completely different experiences.

S T E N C I L T E X T U R I N G

From this video, I learned a really easy way to texture objects. Stencil texturing works by placing the texture as an overlay, which you then click to paint onto the model. You can also change the blend settings, so you can use just the lighter or darker parts of the texture. I found this process very easy, and I enjoyed being the painter and having full control over where each texture goes. It would be a ton easier than what I was planning to do: I planned to use the UV map and apply the textures in Photoshop, whereas stencil texturing works directly on the 3D model, so you can fully visualise what the final texture will be. Stencilling takes the idea behind my plan and simplifies it, so you don't have to stare at the UV map and figure out which bit corresponds to which part of the 3D model.

I also enjoy the style that this method brings. Some sections end up at different resolutions because the texture applied to them was resized, which makes it even more reminiscent of PS1 3D models. Like I've said before, the cognitive dissonance you feel in combination with the simulated physics of the game engine is enough to make someone feel fully immersed, so the textures and models don't need to be too realistic. My thought is that the audience will suspend their disbelief because their perspective is thrown into the world I've put together.

The Process

The Textures I Used (From Textures_DTD)

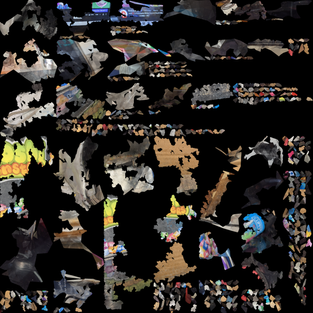

The Completed UV Map

Renders of the Model

C R E A T I N G A N E N V I R O N M E N T

Since I am aiming to create an illusion of reality, I felt I needed to create a space that feels familiar to virtually everyone this experience can reach. I think I should create the mundane: the boring, everyday settings that a lot of people have been in, somewhere someone could look at and say "yeah, I've been here". By doing this, the person going through the experience will be able to relate it to their past experiences of being in a similar place, making them feel comfortable and at home in their 3D-generated surroundings.

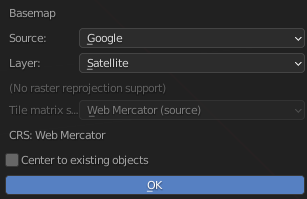

I have found a couple of ways I could do this. Both work by generating a 3D model of a map, which we can later use as the ground and buildings, and then texture and give normal maps to. The first is a Blender addon called "Blender GIS", which retrieves the 3D information. The video below explains how everything works.

The fact that (once you get past the elaborate setup) the tutorial makes it so easy to take any slice of the Earth (according to Google) and observe it in 3D modelling software is really impressive to me. That said, when I did some tests of my own, the results were pretty disappointing. I followed the tutorial and picked an area that is very familiar to me, the town where I went to secondary school. In the video, he uses London, which has a lot of information for buildings, rivers, roads, etc., but the area I picked doesn't seem to have as much. Instead of looking like a city or a town, it looks more like some random polygons over a map.

The Process

Final Renders

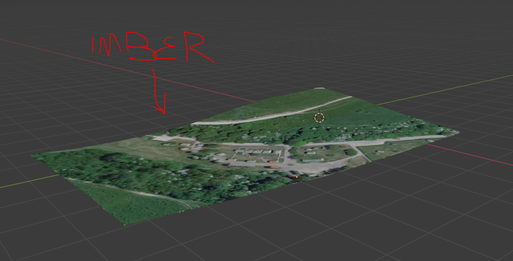

Since these results didn't exactly remind me of home, I tried the second method I found, which looked a lot more promising. This one was far more difficult to get the hang of, and at one point I found out that the reason I couldn't get it to work was that a piece of software I was using was too new, and I had to find an older version. The software is called RenderDoc, and it works in tandem with a Blender addon called MapsModelImporter. Once I figured everything out, it was pretty easy to get into a flow, and I made a few slices of the map.

The Process

I noticed that the further back you are in Google Maps, the more amorphous the 3D model gets and the lower the resolution of the texture. This is most likely so that it can render as much as possible without putting too much strain on the browser. However, when you export the 3D data, you can go in and see all the corners that were cut to make it faster. I took another slice, of the local leisure centre's car park, zoomed in as far as I could to get the highest level of detail achievable, and it's just about good enough that you can make out the text, shadows, objects, and textures. It's quite interesting to see how it compresses the data, and also very strange to see a place I've known for so long in such an alien light. I picked an area I knew for these tests because I wanted to know if the 3D data could trigger certain memories, and it did. When I was roaming around my virtual hometown, navigating became easier, and I recognised all the buildings and what I had been doing when I was there. I find this very interesting; it is as if I am looking at postcards of where I live, giving me nostalgia for a place that does exist using a thing that doesn't.

Final Renders

Practically, I think using RenderDoc and MapsModelImporter moving forward would be in my best interest. I don't think you'll need to move around much in the experience, so zooming in as far as I can to get as much detail as possible, and restricting the movement area with fog or an invisible wall, would be perfect for what I want to achieve. And since your movement will be bound to a certain area, the surroundings will mostly be in the background, meaning they don't have to be perfect or realistic to pass as an environment. This way, I can also import the 3D data into Blender and clean up the models, or paint on them with my own textures using stencil texturing. Exciting stuff!

While working on this, I was reminded of a couple of things. Firstly, I was messing around in Google Maps right at the beginning of lockdown, taking screenshots of my hometown in a similar way to the renders above. I based them on landscape photography and edited them to look like they were shot in black and white with a red filter. Weirdly, these have a lot more detail in them, so I might look further into why that is when I pick locations in the future.

It also reminded me of Jon Rafman's 9 Eyes work using Google Maps. We're both doing very similar things: taking Google's utilitarian images and reappropriating, reframing, and repurposing them (with slight inspiration from Sublime art). There are differences, though. He takes screenshots and I take the 3D data (obviously), and where he looks for the weird and is inspired by the sublime, I'm looking for the mundane and the ordinary. I've found it a lot easier to find what I'm looking for with Google Maps.

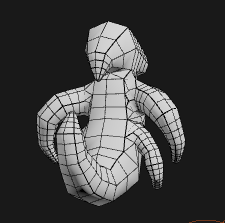

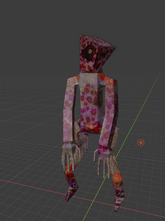

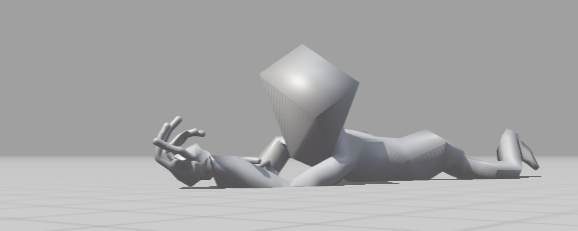

M A K I N G A P L A Y A B L E C H A R A C T E R T O T E S T A N I M A T I N G

Since I was on a bit of a 3D modelling kick, I made a player character. I think having a character that you play as is fairly important, as it again lets you feel that you are controlling a being, with your real-life decisions having consequences in the virtual world. I think this works especially well in my case, as there is no story to go along with it, so the player character is a shell for you to fill in yourself. I textured them with Textures_DTD images again, but I will most likely go back and re-texture them with the photographs I take. For now though, the generated ones work great as placeholders. I leaned into the golem look by picking very rocky and jagged textures, and gave them a very nonplussed, blank face. I went with a bipedal form because I think seeing a body and two legs beneath you in first person would take a lot less getting used to than any other form. I also gave them weird little hands, each with two appendages, which could be animated to grab other objects; I can map that to the buttons on the VR controllers for visual feedback. The other reason I made them a biped is that Adobe's Mixamo requires models to be that way so it can animate them properly.

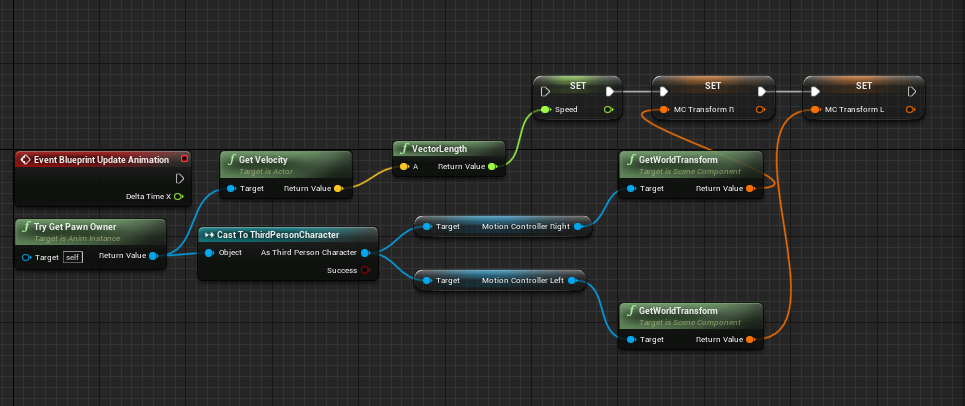

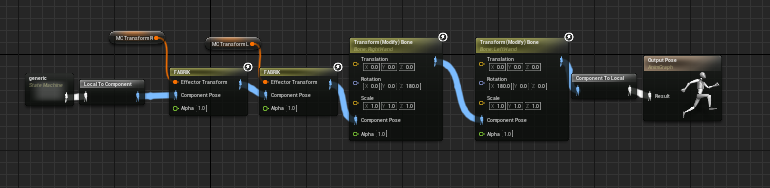

The Making Of

I imported the model into Mixamo, and the rest was so easy. It just asks you to place markers over specific parts of the body (so it knows how to build the skeleton), and that's it. After that, there are tons of free animations for you to sift through and pick from, and you can watch your model perform them in the window on the side. You then just download each animation as a .fbx file and you're good to import and use them. I downloaded a basic locomotion set with walking, turning, jumping, etc., just to test out how to assign animations in Unreal Engine, and also because I just wanted to see the little guy bust a move. This was also the first time I had tested anything in Unreal. I was scared at first, and I still kind of am, but after following a few tutorials I realised that everything can be quite intuitive, and as long as I follow the videos exactly, nothing should go wrong. The only problem with relying on YouTube tutorials is that not everyone does things the same way, so different elements could get in the way of each other and cause a bunch of errors. That's why I picked Unreal Engine 4 though: its easy-to-use Blueprint system. It's just a bunch of nodes that you drag and drop, and you don't have to do a lick of coding at all if you don't want to. The system is so easy to use that following the tutorials is a breeze, and because there aren't really multiple ways to do things, I don't foresee anything causing the game to malfunction.

The Simple Animation Process

I followed this tutorial and came out with something I'm very pleased with. Sure, it's just a simple walking animation, but to me, it's one step closer to my goal. I wouldn't say it got me "used to" the animation process, as the video was 14 minutes of pure mayhem and wasn't as easy out of the gate as I was expecting. I had to watch it through a few times, as I kept getting things wrong. That repetition did teach me a few things, though, and I think I could now get at least halfway there before having to look at the video again. It's fine anyway; as long as the videos stay up, I can watch them as many times as I want. I wasn't too fussed about this being a little difficult, as it was just a proof of concept. Like I said earlier, I want to rig this dude up to a VR setup.

The Result

To do this, I'll be following this tutorial using the same 3D model. I'm doing this because I think having a body underneath you is a comforting feeling, as no one likes to be a floating head. A game I played that did this was Boneworks, and even though I could tell that it wasn't actually my body and the legs were moving on their own based on the character's movement, it did help to trick me into thinking it was there (a sort of 3D placeholder). When you fell in the game, it felt a lot worse because you saw your body underneath you react. It's a lot like the psychological phenomenon where, if something stands in for your body and your brain is sufficiently tricked into thinking it is actually your body, doing things to the stand-in can elicit a response from you. I first saw this in a Rhett and Link video where they try it with a VR headset and a mannequin. It's the same thing here, except the headset-wearing mannequin is a 3D object in the digital realm, with a player camera fixed on its head and set to rotate along with your headset.

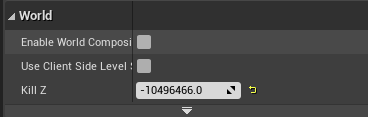

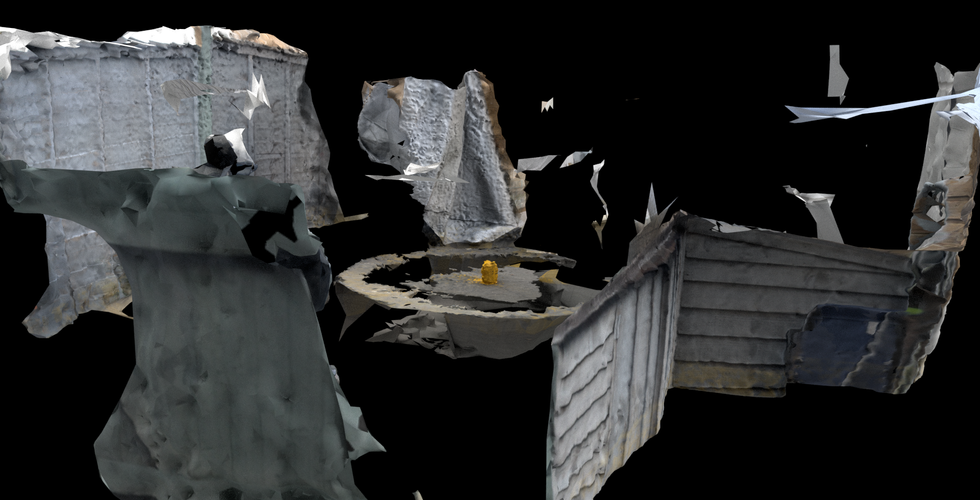

T U R N I N G M A P S I N T O L E V E L S

Next, I wanted to see what would happen if I imported the 3D Google Maps files into Unreal and used them as terrain for the player to walk on. This proved difficult at first, but I found out (yes, all by myself) that Unreal has two ways of calculating collisions: a Simple collision and a Complex one. The Simple collision approximates the overall shape, and with a mesh as complicated as these, it basically put a forcefield over the entire object that my playable character couldn't get through. To fix this, I changed the Collision Complexity to "use complex collision as simple", and my player character could run around on the map as if it were really there. For some reason, the maps were exported really tiny, so I scaled them up in Blender before importing them into Unreal. I also noticed that my character was disappearing when I ventured too far away. To fix that, I lowered the "Kill Z" parameter, which is there to kill the player if they fall out of the map somehow. I just whacked it all the way down for now.

Putting it all Together

Testing out Map #1, Low Detail

Testing out Map #4, High Detail

With these tests, I can tell that everything is coming together. Now that I have something my placeholder character can run around in, all I need now is a VR-playable character that I can put on the map and have it work perfectly. I want to play around with getting more detail into the maps I render from Google, because I enjoyed the environments where I could make out objects more than the ones made up of vague polygons and colours. I think recognition would go a lot further if people could tell where they are. I attempted to make the environments a similar scale to their real-life counterparts, using the player model as a reference (assuming it's the same size as the average human). I did this by eye, and I think I got accurate results, though it would be a lot easier to tell in first person with a VR headset on.
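Since the scaling was done by eye, one way to make it less guesswork is to measure a few objects in the imported map whose real size is roughly known and use the median of real size divided by measured size as the scale factor for the whole map. The sketch below assumes those measurements have already been taken; the function name and all the sizes in the example are hypothetical. For reference, Unreal's default unit is one centimetre and Blender's default is one metre, so the factor also depends on which program you apply it in.

```python
from statistics import median

def scale_factor(references: list[tuple[float, float]]) -> float:
    """Uniform scale that makes measured objects match their real-world sizes.

    `references` holds (measured_size, real_size) pairs. The median ratio is
    used so one badly judged object doesn't skew the whole map.
    """
    ratios = [real / measured for measured, real in references if measured > 0]
    if not ratios:
        raise ValueError("need at least one reference with a positive measured size")
    return median(ratios)

# Hypothetical measurements: Blender units in the imported map vs metres in real life
# for a car (~4.5 m), a door (~2.1 m) and a bus (~9 m).
refs = [(0.045, 4.5), (0.021, 2.1), (0.09, 9.0)]
print(scale_factor(refs))  # roughly 100
```

Using the median rather than the mean means a single object that was misjudged (or a building that is just unusually large) doesn't throw the whole map off.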

Speaking of which, I won't be able to do any of the testing when I make the VR rig, as I am home for Christmas, meaning the Oculus Quest is with its owner. I plan on doing as many tests myself as I can, but any test builds that require a VR headset or the controllers I will send to him to try out for me. I'll ask him to screen record it too, so we can see the results for ourselves, and to add a webcam feed in the corner, so you can see him move and how the model moves in parallel. It's up to him whether he wants that or not though. I may potentially be able to test it myself, but that remains to be seen. I've asked for a VR headset that I can clip my phone into, and there is software to trick your PC into thinking your phone is an actual headset, allowing you to play games and use Steam VR or Desktop VR. The only thing I'm worried about is that the phone headset doesn't come with any controllers, so I don't think I'll be able to do anything with it except look around. We'll see when the time comes.

B U Y I N G A N D T E S T I N G O U T P S X F X

Now is the time! I'm a little reluctant to purchase this, but I think I will be so pleased with the results that I'll forget about the price tag. Plus, I saw that the developer of PSXFX updated it 6 days ago (as of writing this), which suggests it is actively maintained, so I can use it for future projects as well. Also, as of right now, the developer has put the pack on sale for 25% off, making it $24.54 (£18.22). The stars must be aligning!

Several hours later, I'm stumped. The only tutorial I found was from the dude who made it, and all he said was to drag the PSXFX folder from the download into your project. I've figured out that if you import a few blueprints, the default settings appear: everything looks lower resolution and there is a slight dithering effect. However, when I try to change some of the parameters, nothing happens. The vertex snapping, fog, draw distance, and all the other features don't work either. I was trying this on the project with the player character I made, and when I saw it wasn't working there, I opened a blank project and tried it there. Again, no luck. I opened the PSXFX demo project and everything was working perfectly, but I couldn't figure out what the difference was. I was pulling my hair out, and the only thing that seemed like it would be of any use was the Discord server the developer set up. I'm not the best at social interactions, so I was a little reluctant to join, but with my head held high I clicked the link in the name of art.

After one polite welcome message from Marcis himself, I ventured to the help section and found someone with an issue similar to mine. It seems that we can see the dithering and the lowered resolution because those are post-processing effects, whereas everything else works differently; the vertex snapping, for example, is a function applied through materials. I went back into my project to see if I could do anything with this new knowledge.

What's also cool is the showcase section on Marcis's Discord, where people who have bought the asset pack can show other developers what they're working on. I found plenty of screenshots I can use for inspiration, mostly so I can compare effect levels and make sure mine are as accurate/subtle as those of the people who know what they're doing. Maybe once I'm done I can post my project there and see what they think!

B U Y I N G A N D T E S T I N G V R I D G E

I have wanted to make this a VR experience since the beginning, so finally being able to test it will help me visualise where this project can go afterwards. I followed this video tutorial, which shows how to turn a 3D model into a VR-controllable character. I made all of this without a way to test it, and I sent it to my friend with the actual VR headset, but I never received anything back. I needed to take matters into my own hands.

The Texturing, Animating, Rigging, and Programming of the VR Model

UV Map

Since I wasn't able to do any of the VR testing on my own and I needed to do it sooner rather than later, I sought out options that would let me test it with the things I already have. There is software called VRidge by RiftCat, which takes your phone and makes the computer think it is a high-end VR headset like an Oculus Rift or an HTC Vive. Rather annoyingly, the free version only lets you use it for ten minutes a day, which would be useless to me. I find it appropriate that this body of work is somewhat inspired by Vaporwave art, because I too grow tired of capitalist bullshit making me pay to make art. However upset I was about it, though, I caved just like every other human being and paid for it anyway. For Christmas I received a little VR headset that you can slide your phone into (which I asked for specifically so I could test my project), and this seemed to be the only way to test it all without relying on other people to do the tests for me, so as reluctant as I was, I went for it.

Initially, I found the stream between my PC and my phone to be very low quality and laggy. You can adjust a bitrate slider to set how much information is sent to your phone per second, but anything above 1kbps was pretty unusable. 1kbps gave a smooth video feed, but it was so low resolution that you could rarely make anything out.

Screen Recording of my Phone Showing 1KBPS Streaming Between Phone and PC

As Above but with 50KBPS

I found a thread on the RiftCat forum asking the same question as me: how do I get this to actually work nicely? Luckily, a RiftCat employee came to save the day, suggesting a wired connection using USB tethering, because that is a more direct feed of information and allows a higher bitrate than a WiFi connection. I found a cable and wanted to test the quick VR demo I had made using the little character.

VR Test Demo with 1kbps

VR Demo with Maximum Bitrate

The turning in place works great and the camera tracking the VR headset works great, but the programmed movement is a little erratic and the tracking for the hands doesn't work (since I don't have controllers). But the hands are in the default position, which tells me that they are ready to work; they just aren't receiving any inputs. All this aside though, it works, and it doesn't look too bad doing so either! I really like the idea of making this project as accessible as possible, so that even if you didn't have a high-end VR headset you could still have the experience. I'm yet to find a way to do that though, as VRidge makes you pay if you want to spend more than 10 minutes a day using it.

Also, you might have noticed that the character's arms were jutting out in front of it. I think this is because the arms are looking for inputs from motion controllers, but because I don't have any, they are resetting to a default position. RiftCat sell an app that you can buy for a further £4 that can turn a separate phone into a motion controller, but then I would need two spare phones just to try it. Unfortunately, with my interactivity idea for this game being thwarted, I'm going to have to scrap the idea of an in-game camera for now. Unless I pay another £4 and ask my mum and brother to borrow their phones every time I want to try it out, of course.

L O O K I N G A T S O M E W O R K B Y D O M I N I C H A W G O O D

One practitioner I think has been really valuable in my research so far is Dominic Hawgood. I’ve particularly enjoyed his psychedelic-fuelled digital work Casting Out The Self. This work takes all of the disciplines he works across and mashes them into one, making a site-specific installation. He uses a lot of CG and 3D modelling in parallel with his photography, bridging the gap between the digital and the ethereal. While I am all for the psychonaut movement, this work mostly speaks to me because of how it was made, and the experience it creates as you view it. It takes the digital world, which is often seen as cold and clinical, and makes it appear mystical. Hawgood draws a lot of inspiration from scientific research, especially the imaging techniques scientists use to operate their machines. For him, the interest lies in the transfer between real life and the digital (mostly through photogrammetry). A quote from Hawgood reads: “You can learn about all kinds of imagining techniques and you find out you’re hacking your perceptual system, creating illusions and deconstructing the world around you”.

Works From "Casting out the Self"

I have tried photogrammetry previously using VisualSFM, but I attempted to push it to its limits without really knowing the full extent of the software in the first place. I'd like to try it again, since I like the implications of turning photographs into interactable versions of themselves, and I also think it would save a lot of time on making the objects, instead of me having to build them all vertex by vertex in Blender.

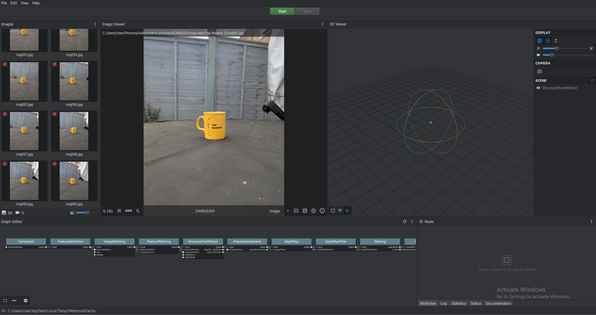

T R Y I N G O U T P H O T O G R A M M E T R Y U S I N G T E S T P H O T O S

To test photogrammetry properly this time, I used an app called SCANN3D, which I've used before. Since it's an app, it has to have a relatively small file size (just like the games from the 80s and 90s), which could mean that shortcuts were taken in how the 3D objects are formed and rendered, so it could be cool to see the differences between the app and some PC software that does the same thing. As for which objects to use, I think I'm going to keep it to everyday objects that one could find in their house. Again, I'm going for an effect where people feel normal in their surroundings, so I feel that would be the best choice. Plus, things around my house are the only things I can get my hands on at the moment due to Covid. I took a lot of inspiration for which things to make into 3D objects from Hawgood, since he used regular items in his work, like empty glass bottles, books, a keyboard and mouse, and a smoking pipe.

I went from room to room picking up objects that I felt would be simple to scan and easy for the app to understand and render properly: nothing with too many corners and edges, and nothing too transparent. I put up a white sheet outside behind the objects so that there was no background information getting in the way, and I got shooting. Luckily, the day I chose to shoot was fairly overcast, so the outdoor lighting was nice, flat and even. The app asks you to set your object up and move yourself around it so you can take pictures of it from all sides, but instead I just rotated the object itself on the sheet to achieve the same thing. The only thing I wasn't looking forward to in this shoot was the fact that it was horribly cold outside.

The shoot was... weird. My first object was my Top Banana mug. I attempted to construct a makeshift infinity table using a garden chair and a bedsheet, but the app told me that it didn't work. I tried again by actually circling the mug, but that image set didn't work either. I tried one more time by placing the mug in the centre of a circular table and walking around that, and it worked... sort of.

Top Banana Mug Image Set 1 (Didn't Work)

Top Banana Mug Image Set 2 (Also Didn't Work)

Top Banana Mug Image Set 3

Thumbnail Generated by SCANN3D

Final Renders of .obj in Adobe Dimension

As you can see, it didn't just scan in the mug, but the table and everything else in the background as well. It looks super cool, except it is going to be a little difficult to incorporate these as objects to look at in the experience I'm making. I think this is because all the objects I picked to scan were a little small, so when the app was looking for information to make up the object, it took it from the background instead. I could still use them in the game perhaps, as even though it's an "unrealistic" rendering of real life, the objects are still represented and you can still make them out. They also look a lot like some alien terrain, so playing with scale here could be interesting. I feel like that takes what I've been looking at and reverses it: it takes something that you're used to and, instead of having it look different but feel the same, makes it feel unusual, which could induce some unsettling feelings. I like the idea of having the two contrast each other in the same piece. Some may say that it clouds the meaning and could be confusing, but I feel that the only way to know that you feel something is to feel something that contrasts that feeling in the same space and time. Once you feel one way, you can identify that feeling more strongly once you've felt the opposite. So if you start in an area that you are used to but move to one you aren't, you will notice that you felt normal in the first place, because you feel that normalcy leaving.

Also, just a side note: in order to export these .obj files, I had to "buy" a month's subscription to SCANN3D. I say buy in quotation marks because they had a 3-day free trial. If I wanted to carry on with it though, it would've been £5.49 a month. I must say, I think that charging in order to export is a little over the top, and I was a little annoyed that I had to put my card details in at all. In hindsight, I could have looked for some free alternative photogrammetry apps, but this one seemed to be the most prestigious.

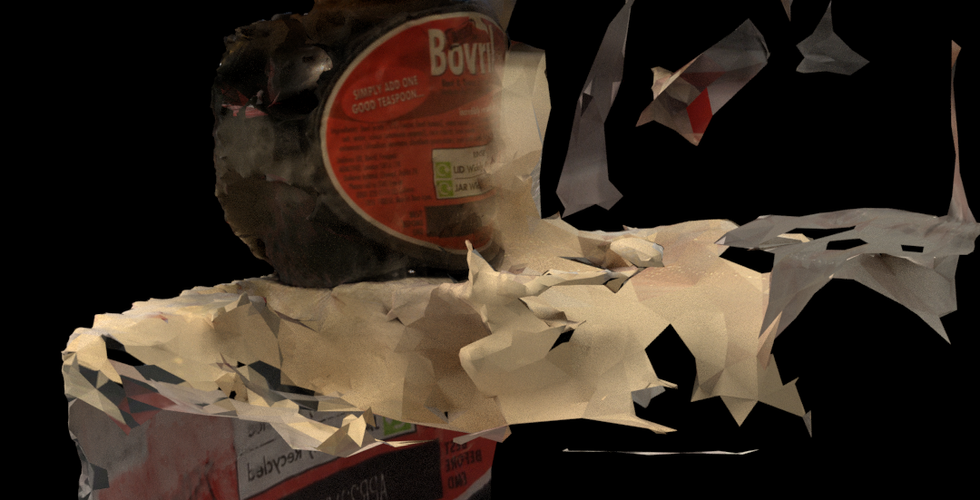

Aspall Bottle Image Set

Thumbnail

Renders

Bovril Image Set

Thumbnail

Renders

Stapler Image Set

Thumbnail

Renders

So I'm in a bit of a state of confusion here, as I didn't get what I was looking for with these experiments, but what I have has a lot of potential. That being said, I still don't have things that actually resemble objects, just things that allude to objects, with signifiers of the backgrounds I did the shoots in. I tried another piece of software earlier in this project called VisualSFM, and I feel that I would get some "better" results from a PC application rather than a phone one.

I decided to test VisualSFM out one more time, using the image sets I took in SCANN3D. I could have used brand new image sets, but SCANN3D's capture interface makes it easier to take images that can be stitched together properly.

I think I misunderstood what VisualSFM does. In fact, I have no idea what it is used for. I thought it generated a 3D mesh, but instead it seems to create a bunch of vertices floating in 3D space. It also exports as the file type .nvm, which (to my knowledge) you can't import into Blender. I went back to the VisualSFM website for answers, but all the info there is about what it does and how to use it, not what to use it for. I think I was barking up the wrong tree with this software the whole time.
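Since an .nvm file is plain text, one possible workaround is to pull the points out and save them as a .ply file, which Blender can import (a points-only file should come in as loose vertices). Below is a minimal sketch, assuming the NVM_V3 layout as I understand it from the VisualSFM documentation: a header line, a camera count followed by one line per camera, then a point count followed by one line per point starting with X Y Z R G B. The function names are my own, it only reads the first model in the file, and it assumes image file names contain no spaces.

```python
import io
from typing import List, Tuple

Point = Tuple[float, float, float, int, int, int]


def read_nvm_points(lines) -> List[Point]:
    """Read XYZ + RGB for each 3D point in the first model of an NVM_V3 file."""
    # Drop blank lines so the counts and records can be read in order.
    rows = [ln.split() for ln in lines if ln.strip()]
    if not rows or not rows[0][0].startswith("NVM_V3"):
        raise ValueError("not an NVM_V3 file")
    idx = 1
    num_cameras = int(rows[idx][0])
    idx += 1 + num_cameras  # skip the count line and every camera record
    num_points = int(rows[idx][0])
    idx += 1
    points = []
    for row in rows[idx:idx + num_points]:
        # Each point line starts with position and colour; the per-image
        # measurements that follow are ignored here.
        x, y, z = map(float, row[0:3])
        r, g, b = map(int, row[3:6])
        points.append((x, y, z, r, g, b))
    return points


def write_ply(points: List[Point], out) -> None:
    """Write the points as an ASCII PLY point cloud (vertices only, no faces)."""
    out.write("ply\nformat ascii 1.0\n")
    out.write(f"element vertex {len(points)}\n")
    for axis in ("x", "y", "z"):
        out.write(f"property float {axis}\n")
    for channel in ("red", "green", "blue"):
        out.write(f"property uchar {channel}\n")
    out.write("end_header\n")
    for x, y, z, r, g, b in points:
        out.write(f"{x} {y} {z} {r} {g} {b}\n")


# A tiny hand-made example: one camera and two points.
sample = """NVM_V3

1
img0001.jpg 1000.0 1 0 0 0 0 0 0 0 0
2
0.5 1.0 2.0 255 0 0 1 0 0 10.0 20.0
-1.0 0.0 3.5 0 128 255 1 0 1 -5.0 7.5
"""

pts = read_nvm_points(sample.splitlines())
buf = io.StringIO()
write_ply(pts, buf)
ply_text = buf.getvalue()
print(ply_text)
```

For a real scan you would read the .nvm from disk and write the result to a .ply file instead of an in-memory buffer. This only gives you the sparse points, not a mesh.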

While reading a bit about different photogrammetry software, I came across several programs with outputs similar to VisualSFM's, where rather than a polygon-based mesh, it's a bit like a three-dimensional pointillist painting. It turns out that this is called a dense point cloud: a set of points in 3D space, usually sampled from the external surfaces of objects. With some better software (or perhaps a larger number of reference images), I could have point clouds that look like this:

Geo-referenced point cloud of Red Rocks, Colorado (by DroneMapper)

Example from software called MicMac

An example of a 1.2 billion data point cloud render of Beit Ghazaleh, a heritage site in danger in Aleppo (Syria)

(It looks super compressed here because of the Wix filesize limit, but I would look this project up, it's pretty cool!)

Reading even further, I found out that this is how most (if not all) photogrammetry software works. It finds matching points across the images, works out where the camera was for each shot, and from that calculates where each point sits in 3D space. Then it takes those points and generates edges and faces between them, resulting in a final mesh. What we were seeing in VisualSFM was just the stage before the points are turned into a 3D mesh. That wasn't something you could do in that software though, so maybe I should try some new ones. Another thing I learned was that photogrammetry software is expected to reconstruct the background of the images as well, so if you want to isolate the object in question, you do that yourself in Blender or other 3D modelling software.
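The "where each point sits in 3D space" step is usually called triangulation: if you know where two cameras were and where the same feature appears in both photos, you can work out the point where their lines of sight meet. Below is a minimal sketch using the standard linear (DLT) method with NumPy. It assumes the 3x4 camera matrices are already known; real software such as Meshroom also has to estimate those camera positions, uses many more views, refines everything together, and only then densifies and meshes the points, so this is just one small piece of the pipeline.

```python
import numpy as np


def triangulate(P1, P2, x1, x2):
    """Linear (DLT) triangulation of one 3D point seen in two images.

    P1, P2: 3x4 camera projection matrices.
    x1, x2: (u, v) image coordinates of the same matched feature in each view.
    """
    u1, v1 = x1
    u2, v2 = x2
    # Each view gives two linear equations in the homogeneous point X:
    # u * (P[2] . X) - P[0] . X = 0 and v * (P[2] . X) - P[1] . X = 0
    A = np.array([
        u1 * P1[2] - P1[0],
        v1 * P1[2] - P1[1],
        u2 * P2[2] - P2[0],
        v2 * P2[2] - P2[1],
    ])
    # Least-squares solution: the right singular vector with the smallest singular value.
    _, _, Vt = np.linalg.svd(A)
    X = Vt[-1]
    return X[:3] / X[3]  # back from homogeneous to ordinary 3D coordinates


def project(P, X):
    """Project a 3D point into an image with camera matrix P."""
    x = P @ np.append(X, 1.0)
    return x[:2] / x[2]


# Two simple cameras: one at the origin, one shifted 1 unit along x.
P1 = np.hstack([np.eye(3), np.zeros((3, 1))])
P2 = np.hstack([np.eye(3), np.array([[-1.0], [0.0], [0.0]])])

X_true = np.array([0.5, 0.2, 4.0])
X_est = triangulate(P1, P2, project(P1, X_true), project(P2, X_true))
print(X_est)
```

With perfect matches the estimate lands back on the original point; with real, slightly noisy matches it gives the best compromise, which is partly why more (and sharper) images tend to give better scans.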

With this new knowledge, I uninstalled VisualSFM and tried a few other (free) options. I was again frustrated to see that the software with the most immaculate results are paid services. Again, my art is put on hold due to capitalism.

REGARD3D

This software was pretty intuitive: all you need to do is upload the image sets, then proceed through the next steps. All parameters have defaults that you don't have to mess around with if you don't want to. I found that just leaving them alone gave me pretty reliable results; most changes made me wait over an hour for anything at all. I processed the Top Banana mug and the stapler image sets, and they both came out with really strange results.

The Process

The results are far more bizarre. The surface looks like an undulating lava lamp with dull earthy tones projected onto it. I tried it again with the stapler image set and received an equally strange object. I exported them as .obj files and imported them into Dimension to do some artsy renders, and found out that it doesn't export them with their image textures, and there doesn't seem to be an option to do so either. I just made them appear metallic and made some renders that are reminiscent of Edgerton's Milk Drop.

Renders of the Top Banana Mug

Renders of the Stapler

Like I said earlier, it would be interesting to include these, but they seem a lot more like interpretive sculptures than the things they are meant to be. At least the objects created with SCANN3D have all the signifiers of the objects from the images I provided, so where there are photographs of things, we can tell what they were supposed to be. This seems a lot more cryptic. Again though, I could make some ambient background pieces with this just to help set the tone. I could bring one of these into Blender, texture it to look like foliage or rocks or something like that, and use it as scenery.

There are examples of Regard3D working really well, so I've begun to realise that maybe the issue lies with the image sets I'm providing. I'll try another shoot in the future; in the meantime I'm going to try a different piece of software.

MESHROOM

Meshroom is amazing! It's incredibly easy to use: all you have to do is upload your image set and hit go. It takes a little while to get your final 3D object, but it is so worth it in the end. I really like the look of the UI as well; it looks like it could be an Adobe program.

The Process

Renders

I was blown away by how accurate it was. I could make out the entire layout of my garden just by looking at the point cloud. It even got the distances between everything right; it's like looking down at it from my bedroom window. The mesh as a whole is a little messy, but if you look at renders 3 and 4, it has actually generated a 3D model in the centre of the table. All I would have to do is make a plane cut beneath the mug and delete everything that isn't the thing I want to keep. Don't get me wrong, it doesn't really look like a mug whatsoever, but these are just tests. I can do another shoot where I give this software a larger image set, getting in closer to capture more detail. Impressed, I put all the other image sets I made with SCANN3D through it too.
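The "plane cut beneath the mug" idea can be sketched in a few lines: work out which side of a flat plane each vertex is on, and keep only the faces sitting entirely above it. Below is a minimal NumPy version, assuming the mesh has already been loaded as a vertex array and a triangle index array (loading the .obj is left out, and the function name is my own). It is a simplification: faces that cross the plane are dropped rather than split, and in Blender itself the Bisect tool with its clear inner/outer options does roughly this job interactively.

```python
import numpy as np


def cut_above_plane(vertices, faces, point, normal):
    """Keep only the faces lying entirely on the side of a plane that `normal` points to.

    vertices: (N, 3) float array; faces: (M, 3) int array of vertex indices.
    point: any point on the plane; normal: direction counted as "keep".
    """
    # Signed distance of every vertex from the plane (positive = keep side).
    signed = (vertices - point) @ normal
    keep_vertex = signed > 0
    # A face survives only if all three of its corners are on the keep side.
    keep_face = keep_vertex[faces].all(axis=1)
    kept_faces = faces[keep_face]
    # Drop unused vertices and renumber the faces to match the smaller array.
    used = np.unique(kept_faces)
    remap = -np.ones(len(vertices), dtype=int)
    remap[used] = np.arange(len(used))
    return vertices[used], remap[kept_faces]


# A toy scene: a square "table top" at z = 0 and a small triangle "object" above it.
verts = np.array([
    [0, 0, 0], [1, 0, 0], [1, 1, 0], [0, 1, 0],          # table top
    [0.4, 0.4, 0.5], [0.6, 0.4, 0.5], [0.5, 0.6, 0.5],   # object
], dtype=float)
faces = np.array([[0, 1, 2], [0, 2, 3], [4, 5, 6]])

new_v, new_f = cut_above_plane(
    verts, faces, point=np.array([0.0, 0.0, 0.1]), normal=np.array([0.0, 0.0, 1.0])
)
print(new_v)
print(new_f)
```

A real scan would also need cuts at the sides (or a bounding box) to remove the garden and the rest of the table, but each of those is just another plane with a different normal.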

Renders of the Aspall Bottle Model

Renders of the Bovril Model

Renders of the Stapler Model

I would really like to use this software again, as it has given the best results so far, and for completely free, too. I think I will be able to get much better results if I give it an image set with more images in it. With that, I can do what I described earlier and actually make some pretty decent objects.

To test out the full functionality of Meshroom, I decided to do an image set of my desk. It's messy, but don't judge. I did my best to capture as much detail and as many angles as I could, while trying to keep similar reference points in each image. The lighting was not ideal, just a horribly bright lightbulb giving off a warm light, but to remedy that I used the flash on my phone. 225 images later, I imported them into Meshroom and clicked start.

Renders

Generated UV Maps